Foreword

(1) Task description

This sample tutorial describes how to use Paddle to complete a machine translation task.

We will use the LSTM API provided by Paddle to build a sequence to sequence with attention machine translation model, and complete the machine translation from English to Chinese on the example data set.

(2), environment configuration

This example is based on Paddle open source framework version 2.0.

import paddle

import paddle.nn.functional as F

import re

import numpy as np

print(paddle.__version__)

# cpu/gpu环境选择,在 paddle.set_device() 输入对应运行设备。

# device = paddle.set_device(‘gpu’)

The output result is shown in Figure 1 below:

1. Data preparation

(1), data set download

We will use the English-Chinese sentence pairs provided by http://www.manythings.org/anki/ as a data set to complete this task. The dataset contains 23,610 bilingual sentence pairs in Chinese and English.

!wget -c https://www.manythings.org/anki/cmn-eng.zip && unzip cmn-eng.zip

!wc -l cmn.txt

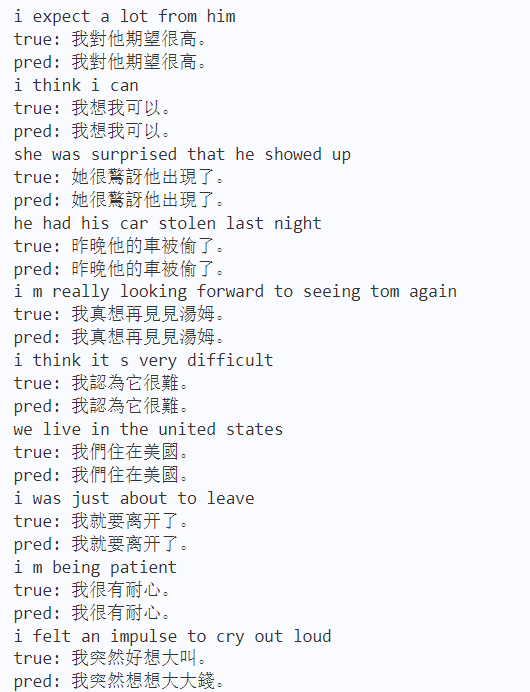

The output result is shown in Figure 2 below:

(2) Constructing the data structure of bilingual sentence pairs

Next, we read the bilingual sentence pair into the python data structure by processing the downloaded text file of the bilingual sentence pair. The following processing is done here.

For English, all English words will be changed to lowercase, and only English words will be kept.

For Chinese, for the sake of simplicity, word segmentation is not done, but word segmentation is done.

In order to make the subsequent program run faster, we obtained a smaller data set by limiting the length of sentences and only retaining sentences beginning with some English words. This resulted in a dataset of 5508 sentence pairs.

lines = open(‘cmn.txt’, encoding=’utf-8′).read().strip().split(‘\n’)

words_re = re.compile(r’\w+’)

pairs = []

for l in lines:

en_sent, cn_sent, _ = l.split(‘\t’)

pairs.append((words_re.findall(en_sent.lower()), list(cn_sent)))

# create a smaller dataset to make the demo process faster

filtered_pairs = []

for x in pairs:

if len(x[0]) < MAX_LEN and len(x[1]) < MAX_LEN and \

x[0][0] in (‘i’, ‘you’, ‘he’, ‘she’, ‘we’, ‘they’):

filtered_pairs.append(x)

print(len(filtered_pairs))

for x in filtered_pairs[:10]: print(x)

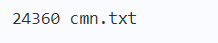

The output result is shown in Figure 3 below:

(3), creating a vocabulary

Next, we create Chinese and English vocabulary respectively. These two vocabulary will be used to convert English and Chinese sentences into a sequence of word IDs. The following three special words have been added to the vocabulary: – : Used to fill in shorter sentences. – : “begin of sentence”, a special word indicating the beginning of a sentence. – : “end of sentence”, a special word indicating the end of a sentence.

Note: In actual tasks, it may be necessary to use (or) special words to represent words that do not appear in the vocabulary.

en_vocab = {}

cn_vocab = {}

# create special token for pad, begin of sentence, end of sentence

en_vocab[‘<pad>’], en_vocab[‘<bos>’], en_vocab[‘<eos>’] = 0, 1, 2

cn_vocab[‘<pad>’], cn_vocab[‘<bos>’], cn_vocab[‘<eos>’] = 0, 1, 2

en_idx, cn_idx = 3, 3

for en, cn in filtered_pairs:

for w in en:

if w not in en_vocab:

en_vocab[w] = en_idx

en_idx += 1

for w in cn:

if w not in cn_vocab:

cn_vocab[w] = cn_idx

cn_idx += 1

print(len(list(en_vocab)))

print(len(list(cn_vocab)))

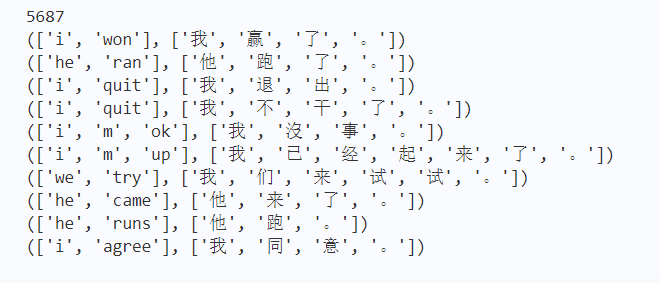

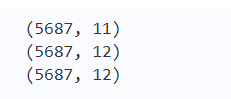

The output result is shown in Figure 4 below:

(4) Create a padding dataset

Next, according to the vocabulary, we will create an actual dataset organized in numpy array for training. – All sentences are supplemented into sentences of the same length. – For English sentences (source language), we reverse them, which results in better translation. – The created padded_cn_label_sents is the target of the prediction during the training process, that is, each current word in Chinese to predict what the next word is.

padded_en_sents = []

padded_cn_sents = []

padded_cn_label_sents = []

for en, cn in filtered_pairs:

# reverse source sentence

padded_en_sent = en + [‘<eos>’] + [‘<pad>’] * (MAX_LEN – len(en))

padded_en_sent.reverse()

padded_cn_sent = [‘<bos>’] + cn + [‘<eos>’] + [‘<pad>’] * (MAX_LEN – len(cn))

padded_cn_label_sent = cn + [‘<eos>’] + [‘<pad>’] * (MAX_LEN – len(cn) + 1)

padded_en_sents.append([en_vocab[w] for w in padded_en_sent])

padded_cn_sents.append([cn_vocab[w] for w in padded_cn_sent])

padded_cn_label_sents.append([cn_vocab[w] for w in padded_cn_label_sent])

train_en_sents = np.array(padded_en_sents)

train_cn_sents = np.array(padded_cn_sents)

train_cn_label_sents = np.array(padded_cn_label_sents)

print(train_en_sents.shape)

print(train_cn_sents.shape)

print(train_cn_label_sents.shape)

The output result is shown in Figure 5 below:

2. Network construction

We will create a model structure of the Encoder-AttentionDecoder architecture to complete machine translation tasks. First we will set some necessary parameters used in the network structure.

embedding_size = 128

hidden_size = 256

num_encoder_lstm_layers = 1

en_vocab_size = len(list(en_vocab))

cn_vocab_size = len(list(cn_vocab))

epochs = 20

batch_size = 16

(1), Encoder part

In the part of the encoder, we build a network that encodes the source language by connecting an LSTM after finding the Embedding. In addition to LSTM, the API of Flying Paddle’s RNN series also provides SimleRNN and GRU for use. At the same time, reverse RNN, bidirectional RNN, multi-layer RNN and other forms can also be used. You can also set whether to perform dropout processing on the middle layer of the multi-layer RNN through the dropout parameter to prevent overfitting.

In addition to using sequence-to-sequence RNN operations, you can also more flexibly create single-step RNN calculations through APIs such as SimpleRNN, GRUCell, and LSTMCell, and even implement your own RNN calculation unit by inheriting RNNCellBase.

# encoder: simply learn representation of source sentence

class Encoder(paddle.nn.Layer):

def __init__(self):

super(Encoder, self).__init__()

self.emb = paddle.nn.Embedding(en_vocab_size, embedding_size,)

self.lstm = paddle.nn.LSTM(input_size=embedding_size,

hidden_size=hidden_size,

num_layers=num_encoder_lstm_layers)

def forward(self, x):

x = self.emb(x)

x, (_, _) = self.lstm(x)

return x

(2), Decoder part

In the decoder part, we complete the decoding through an LSTM that removes the attention mechanism, which is the simplest encoder-decoder model architecture.

Single-step LSTM: In the implementation of the decoder, we also use LSTM. The difference from the Encoder part is that the following code only allows LSTM to calculate forward once each time. The overall recurrent part is done within the training loop.

For the first contact with such a network structure, the following code may be a little complicated to understand. You can better understand it by inserting and printing the shape of each tensor at different steps.

# only move one step of LSTM,

# the recurrent loop is implemented inside training loop

class Decoder(paddle.nn.Layer):

def __init__(self):

super(Decoder, self).__init__()

self.emb = paddle.nn.Embedding(cn_vocab_size, embedding_size)

self.lstm = paddle.nn.LSTM(input_size=embedding_size + hidden_size,

hidden_size=hidden_size)

# for computing output logits

self.outlinear =paddle.nn.Linear(hidden_size, cn_vocab_size)

def forward(self, x, previous_hidden, previous_cell, encoder_outputs):

x = self.emb(x)

#encoder_outputs 16*11*256

context_vector = paddle.sum(encoder_outputs, 1)

#context_vector 16*1*256

context_vector = paddle.unsqueeze(context_vector, 1)

#lstm_input 16*1*384

lstm_input = paddle.concat((x, context_vector), axis=-1)

# LSTM requirement to previous hidden/state:

# (number_of_layers * direction, batch, hidden)

previous_hidden = paddle.transpose(previous_hidden, [1, 0, 2])

previous_cell = paddle.transpose(previous_cell, [1, 0, 2])

x, (hidden, cell) = self.lstm(lstm_input, (previous_hidden, previous_cell))

# change the return to (batch, number_of_layers * direction, hidden)

hidden = paddle.transpose(hidden, [1, 0, 2])

cell = paddle.transpose(cell, [1, 0, 2])

output = self.outlinear(hidden)

output = paddle.squeeze(output)

return output, (hidden, cell)

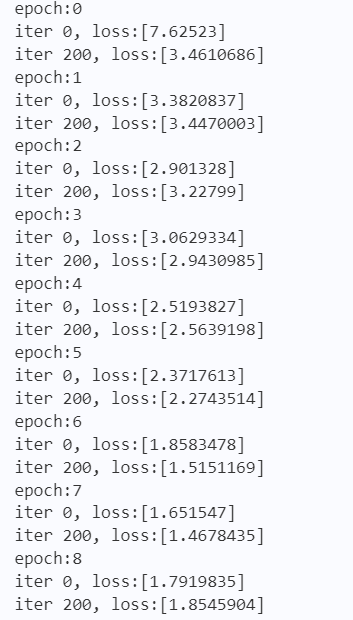

3. Model training

Next we start training the model.

Before the start of each epoch, we randomly shuffle the training data.

We implement the recurrent loop during decoding here by calling atten_decoder multiple times.

Teacher forcing strategy: Every time we decode the next word, we give the real word in the training data as the input for predicting the next word. Correspondingly, you can also try to use the result predicted by the model as the input of the next word. (or a mix of them)

encoder = Encoder()

decoder = Decoder()

opt = paddle.optimizer.Adam(learning_rate=0.001,

parameters=encoder.parameters()+decoder.parameters())

for epoch in range(epochs):

print(“epoch:{}”.format(epoch))

# shuffle training data

perm = np.random.permutation(len(train_en_sents))

train_en_sents_shuffled = train_en_sents[perm]

train_cn_sents_shuffled = train_cn_sents[perm]

train_cn_label_sents_shuffled = train_cn_label_sents[perm]

for iteration in range(train_en_sents_shuffled.shape[0] // batch_size):

x_data = train_en_sents_shuffled[(batch_size*iteration):(batch_size*(iteration+1))]

sent = paddle.to_tensor(x_data)

en_repr = encoder(sent)

x_cn_data = train_cn_sents_shuffled[(batch_size*iteration):(batch_size*(iteration+1))]

x_cn_label_data = train_cn_label_sents_shuffled[(batch_size*iteration):(batch_size*(iteration+1))]

# shape: (batch, num_layer(=1 here) * num_of_direction(=1 here), hidden_size)

hidden = paddle.zeros([batch_size, 1, hidden_size])

cell = paddle.zeros([batch_size, 1, hidden_size])

loss = paddle.zeros([1])

# the decoder recurrent loop mentioned above

for i in range(MAX_LEN + 2):

cn_word = paddle.to_tensor(x_cn_data[:,i:i+1])

cn_word_label = paddle.to_tensor(x_cn_label_data[:,i])

logits, (hidden, cell) = decoder(cn_word, hidden, cell, en_repr)

step_loss = F.cross_entropy(logits, cn_word_label)

loss += step_loss

loss = loss / (MAX_LEN + 2)

if(iteration % 200 == 0):

print(“iter {}, loss:{}”.format(iteration, loss.numpy()))

loss.backward()

opt.step()

opt.clear_grad()

The output result is shown in Figure 6 below:

4. Using models for machine translation

Depending on the computing device you are using, the above training process may take varying amounts of time. (On a Mac laptop, it takes about 15~20 minutes) After completing the above model training, we can get a machine translation model that can translate from English to Chinese. Next, we use this model to complete the actual machine translation through a greedy search. (In actual tasks, you may need to use the beam search algorithm to improve the effect)

encoder.eval()

decoder.eval()

num_of_exampels_to_evaluate = 10

indices = np.random.choice(len(train_en_sents), num_of_exampels_to_evaluate, replace=False)

x_data = train_en_sents[indices]

sent = paddle.to_tensor(x_data)

en_repr = encoder(sent)

word = np.array(

[[cn_vocab[‘<bos>’]]] * num_of_exampels_to_evaluate

)

word = paddle.to_tensor(word)

hidden = paddle.zeros([num_of_exampels_to_evaluate, 1, hidden_size])

cell = paddle.zeros([num_of_exampels_to_evaluate, 1, hidden_size])

decoded_sent = []

for i in range(MAX_LEN + 2):

logits, (hidden, cell) = decoder(word, hidden, cell, en_repr)

word = paddle.argmax(logits, axis=1)

decoded_sent.append(word.numpy())

word = paddle.unsqueeze(word, axis=-1)

results = np.stack(decoded_sent, axis=1)

for i in range(num_of_exampels_to_evaluate):

en_input = ” “.join(filtered_pairs[indices[i]][0])

ground_truth_translate = “”.join(filtered_pairs[indices[i]][1])

model_translate = “”

for k in results[i]:

w = list(cn_vocab)[k]

if w != ‘<pad>’ and w != ‘<eos>’:

model_translate += w

print(en_input)

print(“true: {}”.format(ground_truth_translate))

print(“pred: {}”.format(model_translate))

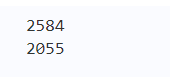

The output result is shown in Figure 7 below: