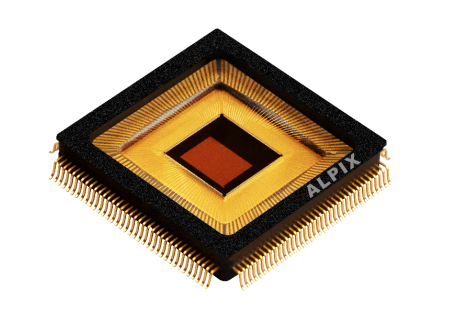

Alpsentek today released the industry’s first integrated bionic event vision sensor chip, ALPIX-Pilatus (ALPIX-P for short). Designed for machine perception, the sensor can be used in robots, smartphones, autonomous driving, drones, security surveillance, and other scenarios.

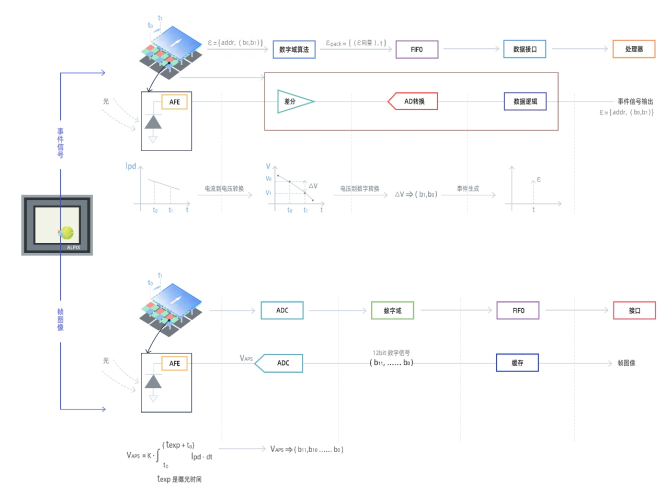

ALPIX-P adopts Hybrid Vision, an integrated bionic event vision sensing technology developed in-house by Alpsentek (Ruisi Zhixin), which combines bionic event vision and traditional image sensing at the pixel level. The chip can switch quickly between a traditional image sensor mode and a bionic event camera mode, giving it the “two-in-one” function of an event camera and a CMOS image sensor (CIS) camera. In image mode, ALPIX-P is a global shutter sensor with a maximum frame rate of 120 frames per second. Its image quality matches that of existing commercial CIS output, and it is compatible with existing mature vision systems. In bionic event camera mode, ALPIX-P offers an ultra-high equivalent frame rate, a high proportion of useful information in its output, and a large dynamic range.

“Hybrid Vision” technology builds on the team’s 10 years of industrial experience with high-end vision sensors and bionic event sensors, and applies the latest vision system design concepts and techniques.

ALPIX series chips based on this technology keep the advantages of event cameras (high speed, low power consumption, low data volume, and high dynamic range) while also providing detailed static image information, so they can meet computer vision’s needs for both dynamic and static detection. This makes them well suited to giving machines “sight”.

Since commercial exploration of bionic event cameras began in 2015, the technology has drawn attention from both academia and industry. In recent years in particular, international sensor giants such as Samsung and Sony have joined in developing and promoting event cameras. The industry currently offers two types of event camera. One is a sensor that outputs only an event stream, such as the two event-based vision sensor (EVS) chips recently released by Sony. The other is a sensor that outputs both an event stream and light-intensity grayscale data. Because of pixel design and other factors, however, images generated from that grayscale data still fall well short of traditional CIS output in signal-to-noise ratio, low-light performance, fixed pattern noise, temperature stability, and similar measures, so their application scenarios are limited.

The newly released ALPIX-P from Ruisi Zhixin is the world’s first truly integrated bionic event camera chip.

Compared with earlier event camera technology solutions, this fusion scheme has three advantages:

First, in most scenarios an existing bionic event camera has to be paired with a traditional image camera in a dual-camera setup. A single ALPIX series chip can replace both chips, which lowers system cost and also provides richer data.

Second, it solves the problem of heterogeneous dual-camera registration. In a dual-camera system that pairs an event camera with a traditional image sensor, the two cameras see the scene from different viewpoints, so event data and image data must be registered in real time. Event data and image data are two completely different kinds of data, however, and spatial registration between them is difficult, which makes low-level fusion algorithms hard to implement. The ALPIX series avoids this problem by design, because the two signals being fused are generated by the same pixels.
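The registration step described above can be illustrated with a minimal sketch. It is not Alpsentek's implementation: the event tuple layout, the function names, and the calibration matrix `H` are all hypothetical, and a single homography is itself a simplification, since real parallax between two cameras depends on scene depth. The sketch only contrasts remapping event coordinates into the image camera's frame with the shared-pixel case, where no remapping is needed.

```python
from typing import List, Tuple

# Hypothetical event layout: (x, y, timestamp_us, polarity).
Event = Tuple[int, int, int, int]
Matrix3 = List[List[float]]


def warp_point(h: Matrix3, x: float, y: float) -> Tuple[float, float]:
    """Apply a 3x3 homography to one pixel coordinate."""
    xs = h[0][0] * x + h[0][1] * y + h[0][2]
    ys = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return xs / w, ys / w


def register_dual_camera(events: List[Event], h: Matrix3,
                         width: int, height: int) -> List[Event]:
    """Map events into the image camera's pixel grid.

    Events that land outside the image after warping are dropped.
    """
    registered = []
    for x, y, t, polarity in events:
        u, v = warp_point(h, x, y)
        ui, vi = round(u), round(v)
        if 0 <= ui < width and 0 <= vi < height:
            registered.append((ui, vi, t, polarity))
    return registered


def register_shared_pixels(events: List[Event]) -> List[Event]:
    """Same pixel array for both signals: coordinates already match."""
    return list(events)


# Assumed calibration: a pure shift of (+12, -3) pixels between the cameras.
H: Matrix3 = [[1.0, 0.0, 12.0],
              [0.0, 1.0, -3.0],
              [0.0, 0.0, 1.0]]

events: List[Event] = [(10, 20, 1000, 1), (630, 2, 1500, -1)]

print(register_dual_camera(events, H, 640, 480))  # second event falls off-frame
print(register_shared_pixels(events))             # both events kept unchanged
```

In the dual-camera path, the second event is lost because it maps outside the 640×480 image, and every surviving event carries whatever error the calibration has. In the shared-pixel path, the event coordinates index the image directly.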

Finally, ALPIX outputs high-quality image signals whose quality matches that of existing commercial CIS, so it is compatible with existing image processing algorithms and architectures.

In addition, subsequent ALPIX chips will build on the ALPIX-P design and adopt 3D stacking technology and a unique underlying chip design, bringing further improvements in pixel size, resolution, cost, and noise and low-light performance.

Ruisi Zhixin is a new vision sensor chip provider that has received investment from Hikvision, Lenovo, Sunny, iFlytek, CAS Star, and Yaotu Capital. Team members come from the University of Cambridge, ETH Zurich, Zhejiang University, and other world-renowned universities, and have worked at internationally known organizations such as CSEM, NXP, ARM, Freescale, Intel, and Magic Leap. The core members of the team have 15 years of technical experience in image sensors. The company operates globally, with R&D centers in Beijing, Nanjing, Shenzhen, and Switzerland.